Software is normally developed in a very precise, deterministic way. The behaviour of a computer is governed by strict logical rules. A computer invariably does exactly what it is told to do.

When writing a program to solve a particular problem, software developers identify the necessary sub-tasks that the program must perform. Algorithms are chosen and implemented for each task. The completed program becomes a detailed specification of exactly how to get from A to B. Every aspect is carefully designed by its developers, who must understand how the various components interact to deliver the program’s functionality.

This prescriptive approach to solving problems with computers has served us well and is responsible for most of the software applications that we use today. However, it is not without limitations. Solutions to problems are constrained by the intuition, knowledge and prejudices of those who develop the software. The programmers have to know exactly how to solve the problem.

Another characteristic of the prescriptive approach that is sometimes problematic is that it is best suited to finding exact answers. Not all problems have exact solutions, and some that do may be too computationally expensive to solve. Sometimes it is more useful to be able to find an approximate answer quickly than to waste time searching for a better solution.

What are Evolutionary Algorithms?

Evolutionary algorithms (EAs) are inspired by the biological model of evolution and natural selection first proposed by Charles Darwin in 1859. In the natural world, evolution helps species adapt to their environments. Environmental factors that influence the survival prospects of an organism include climate, availability of food and the dangers of predators.

Species change over the course of many generations. Mutations occur randomly. Some mutations will be advantageous, but many will be useless or detrimental. Progress comes from the feedback provided by non-random natural selection. For example, organisms that can survive for long periods without water will be more likely to thrive in dry conditions than those that can’t. Likewise, animals that can run fast will be more successful at evading predators than their slower rivals. If a random genetic modification helps an organism to survive and to reproduce, that modification will itself survive and spread throughout the population, via the organism’s offspring.

Evolutionary algorithms are based on a simplified model of this biological evolution. To solve a particular problem we create an environment in which potential solutions can evolve. The environment is shaped by the parameters of the problem and encourages the evolution of good solutions.

The field of Evolutionary Computation encompasses several types of evolutionary algorithm. These include Genetic Algorithms (GAs), Evolution Strategies, Genetic Programming (GP), Evolutionary Programming and Learning Classifier Systems.

The most common type of evolutionary algorithm is the generational genetic algorithm. The basic outline of a generational GA is as follows (most other EA variants are broadly similar). A population of candidate solutions is iteratively evolved over many generations. Mimicking the concept of natural selection in biology, the survival of candidates (or their offspring) from generation to generation in an EA is governed by a fitness function that evaluates each candidate according to how close it is to the desired outcome, and a selection strategy that favours the better solutions. Over time, the quality of the solutions in the population should improve. If the program is successful, we can terminate the evolution once it has found a solution that is good enough.

An Example

Now that we have introduced the basic concepts and terminology, I will attempt to illustrate them by way of an example. Suppose that we want to use evolution to generate a particular character string, for example “HELLO WORLD”. This is a contrived example inasmuch as it assumes that we don’t know how to create such a string and that evolution is the best approach available to us. However, bear with me, as this simple example is useful for demonstrating exactly how the evolutionary approach works.

Each candidate solution in our population will be a string. We’ll use a fixed-length representation so that each string is 11 characters long. Each character in a string will be one of the 27 valid characters (the upper case letters ‘A’ to ‘Z’ plus the space character).
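As a minimal sketch of this representation, the snippet below generates one random candidate. The names `ALPHABET`, `LENGTH` and `random_candidate` are my own choices for illustration and do not come from any particular library.

```python
import random
import string

# Hypothetical names, chosen for this sketch only.
ALPHABET = string.ascii_uppercase + " "  # 'A' to 'Z' plus the space: 27 characters
LENGTH = 11                              # the length of "HELLO WORLD"

def random_candidate(rng=random):
    """Return a string of LENGTH characters drawn uniformly from ALPHABET."""
    return "".join(rng.choice(ALPHABET) for _ in range(LENGTH))

print(random_candidate())  # output varies from run to run
```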

For the fitness function we’ll use the simple approach of assigning a candidate solution one point for each position in the string that has the correct character. For the string “HELLO WORLD” this gives a maximum possible fitness score of 11 (the length of the string).
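One way to write this fitness function is sketched below. The names `TARGET` and `fitness` are assumptions made for the example.

```python
TARGET = "HELLO WORLD"  # the string we are trying to evolve

def fitness(candidate, target=TARGET):
    """Score one point for each position where candidate matches target."""
    return sum(1 for c, t in zip(candidate, target) if c == t)

print(fitness("ZASIB WSUVP"))  # 2 (the space and the 'W')
print(fitness(TARGET))         # 11, the maximum possible score
```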

The first task for the evolutionary algorithm is to randomly generate the initial population. We can use any size population that we choose. Typical EA population sizes can vary from tens to thousands of individuals. For this example we will use a population size of 10. After the initialisation of the population we might have the following candidates (fitness scores in brackets):

1. GERZUNFXCEN (1)

2. HSFDAHDMUYZ (1)

3. UQ IGARHGJN (0)

4. ZASIB WSUVP (2)

5. XIXROIUAZBH (1)

6. VDLGCWMBFYA (1)

7. SY YUHYRSEE (0)

8. EUSVBIVFHFK (0)

9. HHENRFZAMZH (1)

10. UJBBDFZPLCN (0)

None of these candidate solutions is particularly good. The best (number 4) has just two characters out of eleven that match the target string (the space character and the ‘W’).

The next step is to select candidates based on their fitness and use them to create a new generation. One technique for favouring the selection of fitter candidates over weaker candidates is to assign each candidate a selection probability proportionate to its fitness.

If we use fitness-proportionate selection, none of the candidates with zero fitness will be selected and the candidate with a fitness of 2 is twice as likely to be selected as any of the candidates with a fitness of 1. The fitness scores sum to 7, so candidate 4 has a selection probability of 2/7 and each candidate with a fitness of 1 has a probability of 1/7. For the next step we need to select 10 parents, but only six candidates have a non-zero fitness, so some of the fit candidates are going to be selected multiple times.
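Fitness-proportionate selection can be sketched with the standard library's `random.choices`, which accepts relative weights. The function name `select_parents` is hypothetical, and the fallback to uniform selection when every score is zero is my own assumption (the text does not say what to do in that case).

```python
import random

# The ten candidates from the example and their fitness scores.
POPULATION = [
    "GERZUNFXCEN", "HSFDAHDMUYZ", "UQ IGARHGJN", "ZASIB WSUVP", "XIXROIUAZBH",
    "VDLGCWMBFYA", "SY YUHYRSEE", "EUSVBIVFHFK", "HHENRFZAMZH", "UJBBDFZPLCN",
]
SCORES = [1, 1, 0, 2, 1, 1, 0, 0, 1, 0]

def select_parents(population, scores, count, rng=random):
    """Pick `count` parents, each with probability proportional to its score."""
    if sum(scores) == 0:
        # Assumption: with no fitness information, select uniformly at random.
        return [rng.choice(population) for _ in range(count)]
    # Selection is with replacement, so fit candidates can appear several times.
    return rng.choices(population, weights=scores, k=count)

parents = select_parents(POPULATION, SCORES, 10)
print(parents)  # output varies; zero-fitness candidates do not appear
```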

Now that we have some parents, we can breed the next generation. We do this via a process called cross-over, which is analogous to sexual reproduction in biology. For each pair of parents, a cross-over point is selected randomly. Assuming that the first two randomly selected parents are numbers 2 and 4, if the cross-over occurs after the first four characters, we will get the following offspring:

Parent 1: HSFDAHDMUYZ

Parent 2: ZASIB WSUVP

Offspring 1: HSFDB WSUVP

Offspring 2: ZASIAHDMUYZ

This recombination has given us two new candidates for the next generation, one of which is better than either of the parents (offspring 1 has a fitness score of 3). This shows how cross-over can lead towards better solutions. However, looking at the initial population as a whole, we can see that no combination of cross-overs will ever result in a candidate with a fitness higher than 6. This is because, among all 10 original candidates, there are only 6 positions in which we have the correct character.
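The cross-over above, and the limit of 6 on what cross-over alone can achieve, can both be checked with a short sketch. The function names are my own and the cross-over point is passed in explicitly so that the example from the text can be reproduced.

```python
TARGET = "HELLO WORLD"
POPULATION = [
    "GERZUNFXCEN", "HSFDAHDMUYZ", "UQ IGARHGJN", "ZASIB WSUVP", "XIXROIUAZBH",
    "VDLGCWMBFYA", "SY YUHYRSEE", "EUSVBIVFHFK", "HHENRFZAMZH", "UJBBDFZPLCN",
]

def fitness(candidate):
    """One point for each position that matches TARGET."""
    return sum(1 for c, t in zip(candidate, TARGET) if c == t)

def crossover(parent1, parent2, point):
    """Single-point cross-over: the parents swap everything after `point`."""
    child1 = parent1[:point] + parent2[point:]
    child2 = parent2[:point] + parent1[point:]
    return child1, child2

child1, child2 = crossover("HSFDAHDMUYZ", "ZASIB WSUVP", 4)
print(child1, fitness(child1))  # HSFDB WSUVP 3
print(child2, fitness(child2))  # ZASIAHDMUYZ 0

# Positions at which at least one candidate already has the correct character.
covered = {
    i
    for candidate in POPULATION
    for i, (c, t) in enumerate(zip(candidate, TARGET))
    if c == t
}
print(len(covered))  # 6
```

In a real run the cross-over point would be chosen randomly for each pair of parents, for instance with `random.randrange(1, len(parent1))`.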

This can be mitigated to some extent by increasing the size of the population. With 100 individuals in the initial population we would be much more likely to have the necessary building blocks for a perfect solution, but there is no guarantee. This is where mutation comes in.

Mutation is implemented by modifying each character in a string according to some small probability, say 0.02 or 0.05. This means that any single individual will be changed only slightly by mutation, or perhaps not at all.

By applying mutation to each of the offspring produced by cross-over, we will occasionally introduce correct characters in new positions. We will also occasionally remove correct characters but these bad mutations are unlikely to survive selection in the next generation, so this is not a big problem. Advantageous mutations will be propagated by cross-over and selection and will quickly spread throughout the population.
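A mutation operator of this kind might look like the sketch below. The name `mutate` and the default rate are assumptions. Note that in this version the replacement character is drawn from the whole alphabet, so it can occasionally be the same as the character it replaces.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "  # the 27 valid characters

def mutate(candidate, rate=0.02, rng=random):
    """Replace each character, independently with probability `rate`,
    by a character drawn uniformly from ALPHABET."""
    return "".join(
        rng.choice(ALPHABET) if rng.random() < rate else ch
        for ch in candidate
    )

print(mutate("HSFDB WSUVP"))  # output varies from run to run

# Probability that none of the 11 positions is picked for mutation at rate 0.02.
print(round(0.98 ** 11, 2))  # 0.8
```

So with a mutation probability of 0.02, roughly four offspring in five pass through mutation untouched.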

After repeating this process for dozens or perhaps even hundreds of generations we will eventually converge on our desired solution.

This is a convoluted process for finding a string that we already knew to start with. However, as we shall see later, the evolutionary approach generalises to deal with problems where we don’t know what the best solution is and therefore can’t encode that knowledge in our fitness function.

The important point demonstrated by this example is that we can arrive at a satisfactory solution without having to enumerate every possible candidate in the search space. Even for this trivial example, a brute-force search could involve generating and checking up to 27^11 (approximately 5.6 quadrillion) strings.
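The size of the search space follows directly from the representation: 27 possible characters in each of 11 positions. A quick check:

```python
alphabet_size = 27   # 'A' to 'Z' plus the space character
string_length = 11   # len("HELLO WORLD")

search_space = alphabet_size ** string_length
print(search_space)  # 5559060566555523, roughly 5.6 quadrillion
```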

The Outline of an Evolutionary Algorithm

- Genesis – Create an initial set (population) of n candidate solutions. This may be done entirely randomly or the population may be seeded with some hand-picked candidates.

- Evaluation – Evaluate each member of the population using some fitness function.

- Survival of the Fittest – Select a number of members of the evaluated population, favouring those with higher fitness scores. These will be the parents of the next generation.

- Evolution – Generate a new population of offspring by randomly altering and/or combining elements of the parent candidates. The evolution is performed by one or more evolutionary operators. The most common operators are cross-over and mutation. Cross-over takes two parents, cuts them each into two or more pieces and recombines the pieces to create two new offspring. Mutation copies an individual but with small, random modifications (such as flipping a bit from zero to one).

- Iteration – Repeat the Evaluation, Survival of the Fittest and Evolution steps until a satisfactory solution is found or some other termination condition is met (such as the number of generations or elapsed time).
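Putting the steps above together for the “HELLO WORLD” example gives something like the following sketch. It is one possible arrangement, not a definitive implementation: the function name `evolve`, its parameters and their default values are all assumptions, the population size is assumed to be even so that parents pair up exactly, and remembering the best candidate seen so far is a convenience I have added.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "
TARGET = "HELLO WORLD"

def fitness(candidate):
    """One point for each position that matches TARGET."""
    return sum(1 for c, t in zip(candidate, TARGET) if c == t)

def evolve(pop_size=100, mutation_rate=0.02, max_generations=1000, seed=None):
    """Run a simple generational GA; return (best candidate, generations used)."""
    if pop_size % 2:
        raise ValueError("this sketch assumes an even population size")
    rng = random.Random(seed)

    # Genesis: an entirely random initial population.
    population = [
        "".join(rng.choice(ALPHABET) for _ in TARGET) for _ in range(pop_size)
    ]
    best = max(population, key=fitness)
    generation = 0

    # Iteration: stop on a perfect match or when the generation limit is hit.
    while fitness(best) < len(TARGET) and generation < max_generations:
        # Evaluation.
        scores = [fitness(c) for c in population]

        # Survival of the fittest: fitness-proportionate selection
        # (uniform if every score is zero, which is an assumption).
        weights = scores if sum(scores) > 0 else None
        parents = rng.choices(population, weights=weights, k=pop_size)

        # Evolution: single-point cross-over followed by mutation.
        offspring = []
        for p1, p2 in zip(parents[0::2], parents[1::2]):
            point = rng.randrange(1, len(TARGET))
            for child in (p1[:point] + p2[point:], p2[:point] + p1[point:]):
                offspring.append("".join(
                    rng.choice(ALPHABET) if rng.random() < mutation_rate else ch
                    for ch in child
                ))
        population = offspring
        generation += 1
        best = max([best] + population, key=fitness)

    return best, generation

best, generations = evolve(seed=1)
print(best, fitness(best), generations)
```

There is no guarantee that a run reaches the target within the generation limit, so the caller should check the fitness of the returned candidate rather than assume success.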

When are Evolutionary Algorithms Useful?

Evolutionary algorithms are typically used to provide good approximate solutions to problems that cannot be solved easily using other techniques. Many optimisation problems fall into this category. It may be too computationally intensive to find an exact solution, but sometimes a near-optimal solution is sufficient. In these situations evolutionary techniques can be effective. Due to their random nature, evolutionary algorithms are never guaranteed to find an optimal solution for any problem, but they will often find a good solution if one exists.

One example of this kind of optimisation problem is the challenge of timetabling. Schools and universities must arrange room and staff allocations to suit the needs of their curriculum. There are several constraints that must be satisfied: a member of staff can only be in one place at a time; staff can only teach classes that are in their area of expertise; rooms cannot host lessons if they are already occupied; and classes must not clash with other classes taken by the same students. This is a combinatorial problem and known to be NP-hard. It is not feasible to exhaustively search for the optimal timetable due to the huge amount of computation involved. Instead, heuristics must be used. Genetic algorithms have proven to be a successful way of generating satisfactory solutions to many scheduling problems.
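To make the idea of scoring a timetable concrete, here is a purely illustrative sketch of a fitness function that penalises double-bookings. The `Lesson` type, its fields and the scoring formula are all hypothetical, and the expertise constraint is left out for brevity. A real timetabling system would need a much richer model.

```python
from collections import Counter
from typing import NamedTuple

class Lesson(NamedTuple):
    """One scheduled lesson (a hypothetical representation)."""
    teacher: str
    room: str
    group: str  # the group of students taking the lesson
    slot: int   # index of the time slot

def clashes(timetable):
    """Count double-bookings of teachers, rooms and student groups."""
    total = 0
    for field in ("teacher", "room", "group"):
        counts = Counter((getattr(lesson, field), lesson.slot) for lesson in timetable)
        # n lessons sharing a resource in one slot count as n - 1 clashes.
        total += sum(n - 1 for n in counts.values())
    return total

def fitness(timetable):
    """Higher is better: 1.0 for a clash-free timetable, tending to 0 as clashes grow."""
    return 1.0 / (1 + clashes(timetable))

timetable = [
    Lesson("Smith", "R1", "7A", 0),
    Lesson("Smith", "R2", "7B", 0),  # Smith is booked twice in slot 0
    Lesson("Jones", "R1", "7A", 1),
]
print(clashes(timetable), fitness(timetable))  # 1 0.5
```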

Evolutionary algorithms can also be used to tackle problems that humans don’t really know how to solve. An EA, free of any human preconceptions or biases, can generate surprising solutions that are comparable to, or better than, the best human-generated efforts. It is merely necessary that we can recognise a good solution when it is presented to us, even if we don’t know how to create one. In other words, we need to be able to formulate an effective fitness function.

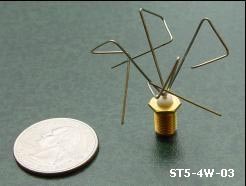

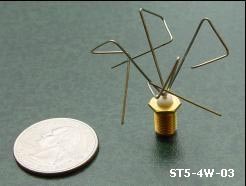

Engineers working for NASA know a lot about physics. They know exactly which characteristics make for a good communications antenna. But the process of designing an antenna so that it has the necessary properties is hard. Even though the engineers know what is required from the final antenna, they may not know how to design the antenna so that it satisfies those requirements.

NASA’s Evolvable Systems Group has used evolutionary algorithms to successfully evolve antennas for use on satellites. These evolved antennas (pictured) have irregular shapes with no obvious symmetry. It is unlikely that a human expert would have arrived at such an unconventional design. Despite this, when tested these antennas proved to be extremely well adapted to their purpose.

Other Examples of Evolutionary Computation in Action

Pre-requisites

There are two requirements that must be met before an evolutionary algorithm can be used for a particular problem. Firstly, we need a way to encode candidate solutions to the problem. The simplest encoding, and that used by many genetic algorithms, is a bit string. Each candidate is simply a sequence of zeros and ones. This encoding makes cross-over and mutation very straightforward, but that does not mean that you cannot use more complicated representations. In fact, most of the examples listed in the previous section used more sophisticated candidate representations. As long as we can devise a scheme for evolving the candidates, there really is no restriction on the types that we can use. Genetic programming (GP) is a good example of this. GP evolves computer programs represented as syntax trees.
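To show why the bit-string encoding makes the operators so straightforward, here is a small sketch with candidates held as lists of 0s and 1s. The function names are my own.

```python
import random

def random_bits(length, rng=random):
    """A random bit-string candidate, held as a list of 0s and 1s."""
    return [rng.randint(0, 1) for _ in range(length)]

def flip_mutation(bits, rate, rng=random):
    """Flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]

def one_point_crossover(a, b, point):
    """Swap the tails of two equal-length bit strings after `point`."""
    return a[:point] + b[point:], b[:point] + a[point:]

print(one_point_crossover([0, 0, 0, 0], [1, 1, 1, 1], 1))
# ([0, 1, 1, 1], [1, 0, 0, 0])
print(flip_mutation([0, 1, 1, 0], rate=1.0))  # [1, 0, 0, 1]
```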

The second requirement for applying evolutionary algorithms is that there must be a way of evaluating partial solutions to the problem – the fitness function. It is not sufficient to evaluate solutions as right or wrong; the fitness score needs to indicate how right or, if your glass is half empty, how wrong a candidate solution is. So a function that returns either 0 or 1 is useless. A function that returns a score on a scale of 1 to 100 is better. We need shades of grey, not just black and white, since this is how the algorithm guides the random evolution to find increasingly better solutions.
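The difference is easy to see with the “HELLO WORLD” example. In the sketch below (function names are my own), the all-or-nothing function cannot distinguish a near miss from a hopeless candidate, whereas the graded function can.

```python
TARGET = "HELLO WORLD"

def binary_fitness(candidate):
    """Right or wrong: gives selection nothing to work with until the end."""
    return 1 if candidate == TARGET else 0

def graded_fitness(candidate):
    """One point per correct position: rewards partial progress."""
    return sum(1 for c, t in zip(candidate, TARGET) if c == t)

for candidate in ("HELLO WORLX", "UJBBDFZPLCN"):
    print(candidate, binary_fitness(candidate), graded_fitness(candidate))
# HELLO WORLX 0 10
# UJBBDFZPLCN 0 0
```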

This is the first in a short series of articles on practical Evolutionary Computation. The text is taken from the work-in-progress documentation for the Watchmaker Framework for Evolutionary Computation. The next article will demonstrate how to implement evolutionary algorithms in Java using the Watchmaker Framework.
